Home

Created with love by Neurotech@Davis Board Members

Welcome to the Neurotech@Davis Wiki, your comprehensive resource for all things related to neurotechnology. Here you will find learning resources, documentation and guides.

What is Neurotech?

Neurotechnology, or Neurotech for short, is a dynamic and multidisciplinary field that harnesses the power of technology to study and interact with the brain and the nervous system. It encompasses a wide range of exciting areas, including:

- Neuroimaging: Explore the world of brain imaging techniques, such as fMRI, EEG, and PET scans, that allow us to peer into the intricacies of the brain.

- Brain-Computer Interfaces (BCIs): Learn about cutting-edge technologies that enable direct communication between the brain and external devices, opening up new possibilities for communication and control. Signal Processing: Dive into the world of signal processing, where we analyze and make sense of neural data to uncover hidden patterns and insights.

- Machine Learning: Discover how machine learning and artificial intelligence are revolutionizing our understanding of the brain and its functions.

- Deep Learning: Explore the role of deep learning in decoding brain signals and advancing neuroscientific research. Neuroethical Considerations: Delve into the ethical dimensions of neurotech, including issues related to privacy, consent, and responsible data use.

How to use this Wiki?

This wiki is designed to be a collaborative space where we can collect our resources and insights and share them with you. Here's how you can make the most of it:

- Browse: Use the index on the left to navigate through the various pages. You will find sections within those pages making topics easier to find.

- Contribute: Feel free to reach out to any Neurotech officer to contribute to this wiki, we are more than happy to let you contribute. There is always something to add in the Wiki as it is always a work in progress

- Learn: Whether you're a beginner or an expert, you'll find articles that cater to your level of knowledge.

To clone this Wiki locally, enter the following command:

git clone https://github.com/Neurotech-Davis/Neurotech-Wiki.wiki.git

Development Environment

By Priyal Patel for Neurotech@Davis

Your "dev" environment consists of certain software applications that run on your local device (ex. computer). Using these applications, you can easily share code, organize your files, and write/test code. In this section, we will be covering how to use the OpenBCI GUI, Github, git, VSCode, Jupyter Notebooks, and the terminal.

What is OpenBCI?

By Priyal Patel for Neurotech@Davis

OpenBCI is a neurotech company whose hardware we use to collect brain data. Using their GUI, we are able to visualize the data in real-time and access these recordings in "./Documents/OpenBCI_GUI".

Installing GUI

Follow this guide to install the OpenBCI GUI.

Once the package appears in your “Downloads” folder, double click to open and keep clicking next button to fully install the package.

How to Use

Opening the GUI

In Finder/File Explorer, look for "OpenBCI_GUI" in Applications and then double click on the app.

If the error message below pop-ups, then right click on the app and click "open".

Starting a Secession

First, you need to have the USB dongle plugged into the device running the GUI and the cyton board turned on with batteries. See 'Data Collection Steps & Help' in Additional Resources for more information.

Then, select these options:

- CYTON (live)

- Serial (from Dongle)

- 8 CHANNELS

- OpenBCI

- File

Under "Secession Data" you can add a custom secession name.

Finally, click either "START SECESSION" or "AUTO-CONNECT".

Troubleshooting Errors

There are many reasons for why a red error message will appear when trying to start a secession.

Here are some ways to fix these errors:

-Try clicking either "START SECESSION" or "AUTO-CONNECT" at least 5 times. It usually takes a couple seconds before the connection is established.

-Check that the batteries have been plugged into the cyton board and are facing the correct direction in the holder.

-Check there is a light on the cyton board and the switch is set to "PC".

-Check that there is a red light flashing on USB dongle. If not, then switch the black reset switch to the right and then back to the left.

-Relaunch the GUI and repeat the secession starting process.

-Replace any dead batteries.

-Use another device as the GUI runs better on some devices compared to others.

Starting/Stopping a Stream

Press "Start Data Stream" button to begin recording brain data. After, press "Stop Data Stream" button to stop recording data.

See 'GUI Widgets' in Additional Resources to learn more about other GUI features.

Access Data

In Finder/File Explore, under Documents open the "OpenBCI_GUI" folder. In "Recordings", find the secession folder by the custom name you provided or by the date/time the secession was started. Inside the secession folder find all recordings based on date/time the recording was started.

Additional Resources

What is the Terminal?

By Priyal Patel for Neurotech@Davis

The Terminal is an application primarily used to easily access and edit your files using special commands. It is referred to as the Command Prompt on Windows. The commands used will differ based on the type of computer you have such as a MAC or Windows laptop. Developers also use the Terminal to install packages and run their code. The code's output or errors with how the code was written will be printed in the Terminal.

Commands

Commands are what you type after the cursor mark. Commands are structured as "[abbreviated action] [inputs]". When reading an instruction set, you can recognize Terminal commands by '$', '>', or '%' appearing before the command. To run the command, you will need to copy everything that appears right after one of these symbols into the Terminal and then hit enter. Commands are space and spelling sensitive, so you need to make sure you write each command exactly as it appears.

Path

A path is a way to represent where a folder or file is located. Each folder or file is separated by a '/'. Your desired folder or file will be the rightmost item and the folder(s) that contains this item will be located to the left; for example, "/folder/folder/file".

Absolute Path

This path starts with the root directory usually the 'Users' folder. The root directory is the folder that holds all of your folders and files; for example, "/Users/priyal/Desktop/Neurotech".

Relative Path

This is the shorthand version of the absolute path. To represent the previous folder, you can use '.'; for example, if you are currently in 'priyal' folder, then your shorthand path is "./Desktop/Neurotech".

Common Linux Commands

If you have a Windows laptop, then you will need to either install WSL before being able to run these commands or use the equivalent Windows commands. See 'WSL Installation Guide' or 'Windows Commands' in Additional Resources.

Manual Page: man

See what a command does and how to use it.

$ man <command>

Present Working Directory: pwd

See what folder you are currently in (absolute path).

$ pwd

List: ls

See the contents of the folder you are currently in.

$ ls

Concatenate: cat

See the contents of one or more files in the order specified.

$ cat <file1-name> <file2-name>

Move: mv

Move a file to a new location or rename file/keep file in same location.

$ mv <file-you-want-to-move> <new-path-or-name>

Change Directory: cd

Go from your current folder to another folder.

$ cd <absolute-or-relative-path>

The shorthand to go back to the parent folder is:

$ cd ..

Make Directory: mkdir

Create a folder with the specified name.

$ mkdir <folder-name>

Remove Empty Directory: rm -r Delete a folder and all of its contents.

$ rm -r <folder-name>

Remove Non-Empty Directory: rm -d Delete an empty folder.

$ rm -d <folder-name>

Remove File: rm Delete a file.

$ rm <file-name>

Clear: clear

Remove all commands from the Terminal's display. If you use the up arrow key, then you can still see some of the past commands used.

$ clear

Stop Execution: ^c (ctrl+c)

After running a command, you can use this shortcut to prevent the command from finishing execution.

$ <command>

^c

For a full list of commands, see 'Unix Tutorial' or 'Linux Commands Cheat Sheet' in Additional Resources.

Additional Resources

What is Jupyter Notebook?

By Priyal Patel for Neurotech@Davis

Jupyter Notebook is a web application that allows you to easily write, run, and visualize smaller chunks of code. It is commonly used for data preprocessing and analysis.

Installing Jupyter Notebook

First, install python by downloading newest release and choose the version where the operating system matches the device you are using (ex. macOS for a MAC).

After, install pip using either method mentioned (ensurepip or get-pip.py).

Finally, open the Terminal (Mac/Linux) or Command Prompt (Windows) and run this command:

$ pip install notebook

See 'Anaconda Navigator' in Additional Resources for an alternative installation method.

How to Use

Opening Jupyter Notebook

To open Jupyter Notebook, run this command:

$ jupyter notebook

Make sure the Terminal window that you used to run this command stays open the whole time you are using Jupyter Notebook.

Files and Folders Structure

The Files section will look very similar to Finder (Mac) or File Explorer (Windows). If you select the box next to a folder/file, then click on an option this change will also automatically apply to your Finder/File Explorer. For example, if you delete a file, then you will need to go to your trash to recover it.

Creating a Notebook

Navigate to the folder where you want the notebook to be stored. Click "New" and select "Python 3". The new notebook will open in a new tab. If you navigate back to the folder, then a .ipynb file will have been added.

Press Save

The most important thing to remember is to periodically press the "save" button especially before closing the notebook or your laptop. After pressing the button, make sure a checkpoint created message appears.

Cells

Each box is called a cell. Using cells allows you to split up your code into multiple cells and add markdown cells to provide written explanations. Cells can be run in any order; however, if certain code requires an imported package, then the cell importing the package needs to be run first.

To add a cell, either click on "+" or select an option under "Insert". Use the dropdown to specify what type of cell (ex. code).

More operations with cells can be found under "Edit".

To run a cell, either click on "> Run" or select an option under "Cell".

See the 'Beginner Tutorial' in Additional Resources for more features and how to use them.

Closing Jupyter Notebook

Close all tabs in your browser that are running Jupyter Notebooks. Then, type (ctrl+c) and after 'y' in the Terminal used to open Jupyter Notebook:

^c

y

Additional Resources

What is VSCode?

By Priyal Patel for Neurotech@Davis

VSCode is an IDE, integrated development environment. What this means is it combines various applications that run separately into one application.

VSCode is just one option, so feel free to use your preferred IDE.

Installing VSCode

Download the application on to your device.

How to Use

In Finder/File Explorer, under Applicantion find "Visual Studio Code" and click to open.

Review the 'Getting Started Guide' in Additional Resources.

Key Features

File Tree

Allows you to see, edit, and easily move all sub-folders and files in your project.

Terminal

The terminal is easily accessible from within VSCode. This allows you to run your code faster and easily check-in your code using git; the next section will provide more information about git.

Extensions

Also known as plugins, Extensions allows you to install packages. These could be necessary dependencies for your code or tools to help make the process of writing code easier. Feel free to explore the plugins available.

Here are some useful plugins:

WSL

For Windows users, this plugin will allow you to use linux commands in the terminal.

Live Share

This plugin allows multiple people to edit the same files in real time.

See the 'Live Share Guide' in Additional Resources for more information.

Prettier

When you click save, this plugin automatically formats your code to match standard coding formatting practices. This allows for consistent formatting and helps with make your code more readable.

Additional Resources

What is Github?

By Priyal Patel for Neurotech@Davis

Github is a web application that stores multiple versions of a project. This allows multiple users to make changes to the project at the same time. The application also has features that allow users to easily integrate all of their changes into one project.

Repository

A repository stores all files and folders needed for a project. You can also see the project's revision history.

Branches

One way to maintain multiple versions of the project is by using separate branches. The branch that holds the desired version of the project is called Main; this will be automatically created when you create a repository.

Users are able to make a new branch that contains a copy of the content in the Main or any other branch. This also called forking a respository.

Pull Request

Users can integrate their changes in their branch into another branch by opening a pull request.

Clone Repository

To make changes, a user will need to clone the desired repository. This will create a copy of the repository on your device. You will need to use git (mentioned below) to download changes from other users and upload your changes.

README

Users will add any important information about your project using markdown to the README.md file.

How to Use

Creating an Account

Follow Part 1 of this guide to setup your github account.

See the 'Github Hello World' guide in Additional Resources to practice using the features mentioned above.

What is Git?

Git is the version control software that interacts with Github (repository storing service). Git is a tool that allows users to interact with different versions of the project. Using Git, users can save the changes on their device, upload their changes to the Github repository, switch to another version of the project, and perform many more tasks.

Remote Repository

The repository that appears on Github. This respository is stored on Github servers.

Local Repository

The repository that stored on your device. It consists of everything in the .git directory.

Staging Area

The staging area consists of the files you want git to track. You must explicitly add these files to the staging area each time you want git to know about new file edits.

Working Directory

This is the folder on your device that holds all files and folders in your local repository.

All of your changes are only stored here until you add them to the staging area, commit them to the local repository, or push them to the remote repository.

See 'Medium on Git' in Additional Resources for more information.

Installing Git

Download the git version that matches your device.

How to Use

This tutorial will be using VSCode and show you how using VSCode will make the process of using git easier. The tutorial will be only using the MAIN branch, so see 'Git Branches' in Additional Resources for using multiple branches.

Setting Credentials

Follow this guide to set up git and link your account.

Create a Respository

On Github:

- Sign into your account

- Click on your profile

- Click on 'Your repositories'

- Click on the green button 'New'

- Give your repo a name

- Select public or private

- (Optional) Select 'Add a README file'

- Click 'create repository'

Cloning a Repository

You can either clone an existing repository or create a new one and then clone this repo. For private repositories, you can only clone them if you are added as a contributer. See 'Adding Contributors' in Additional Resources.

Go to your repository and click on the green 'Code' button. Copy the link under 'HTTPS'.

In VSCode terminal run:

$ git clone <https-repo-url>

Git Changes Workflow

After making changes to a file, the first step is to make sure you get any new changes another user has made on the same branch. If you do not do this, then you will overwrite the changes that other users have made. :(

$ git pull

If you have merge conflicts, then see 'Resolving Merge Conflicts' in Additional Resources.

Next, you will need to add the changes to the staging area. Make sure you are in the parent folder that has all your changes (hint: use cd terminal command to navigate to the correct folder).

$ git add .

(Optional) To see what files have been added to the staging area, you can run this command:

$ git status

Then, you will need to save a copy of all these changes to your local repository (.git).

$ git commit -m "<any-description-of-changes>"

Finally, you can move the changes from your local repository to the remote repository on Github.

$ git push

The first time you run this process you will need to run this command instead (default branch name is MAIN):

$ git push --set-upstream origin <branch-name>

See 'Git Workflow' in Additional Resources for more information about these steps.

Additional Resources

- Github Hello World

- Medium on Git

- Git Branches

- Git Cheat Sheet

- Git Workflow

- Adding Contributors

- Resolving Merge Conflicts

Fun Fact

This WIKI is hosted in a remote repository called 'Neurotech-Wiki' and all my changes were added using git.

Python Basics

By Dhruv Sangamwar for Neurotech@Davis

Python is a versatile and popular programming language known for its simplicity and readability. In this tutorial, we will cover the basics of Python, including variables, data types, control structures, functions, and more.

Table of Contents

- Table of Contents

- 1. Getting Started

- 2.Variables and Data Types

- 3. Control Structures

- 4. Functions

- 5.Modules and Packages

- 6. File Handling

- 7. Error Handling

- 8. Object Oriented Programming

- 9. Conclusion

1. Getting Started

1.1. Installing Python

Before you can start programming in Python, you need to make sure you have a text-editor and the right environment.

MacOS

- We personally recommend using Homebrew, a package manager that handles package download and installations

- Follow the following commands to install , python and some libraries.

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install.sh)": Installs Homebrew.

export PATH="/usr/local/opt/python/libexec/bin:$PATH": This sets up the Path variable so that your command line can find python. The following commands will install python along with some libraries:

brew install python

python --version

pip install numpy

pip install seaborn

pip install matplotlib

We strongly reccomend that you use Visual Studio code as it has plenty of free plugins that make development in python easier.

If you want to use any additional packages, visit PyPi. This is the python package index where you can find installation instructions and documentation for packages. (Make sure to check which version you want before installation)

Windows

- Environment setup has a couple more steps on Windows

- Open command prompt as an administrator

- Run

wsl --installto install WSL2.- Restart your computer after the step.

- At this point you should be able to run

wslin your command prompt which should start a linux vm.- If this is not the case please reach out to anyone on the projects division to debug platform related issues.

- You can now use the same commands as above for MacOS to install python and all the related dependencies

- Install Visual Studio code and run

code .in your command prompt;code .should open up VScode where you can write all your python code.- WSL should detect that you are trying to open VScode, it will install the virtualized version of it automatically and open the desktop VScode client.

1.2. Running Python

You can run Python code in two ways: using the Python interactive interpreter or by creating Python scripts.

To start the Python interactive interpreter, open your terminal or command prompt and type:

python

You can also run Python scripts by creating a .py file and executing it with the python command.

# hello.py

print("Hello, Python!")

To run the script:

python hello.py

2.Variables and Data Types

In Python, you don't need to declare the data type of a variable explicitly. Python infers it based on the value assigned.

# Integer

x = 10

# Float

y = 3.14

# String

name = "Alice"

# Boolean

is_python_fun = True

# List

fruits = ["apple", "banana", "cherry"]

# Tuple

coordinates = (3, 4)

# Dictionary

person = {"name": "Bob", "age": 30}

3. Control Structures

Python provides various control structures like if, for, and while for managing program flow.

3.1. Conditional Statements

if condition:

# Code to execute if the condition is True

elif another_condition:

# Code to execute if the second condition is True

else:

# Code to execute if none of the conditions are True

3.2. Loops

3.2.1. for Loop

for item in iterable:

# Code to execute for each item in the iterable

3.2.2. while Loop

while condition:

# Code to execute as long as the condition is True

4. Functions

Functions allow you to encapsulate code into reusable blocks.

def greet(name):

"""A simple greeting function."""

print(f"Hello, {name}!")

# Calling the function

greet("Alice")\

5.Modules and Packages

Python has a rich ecosystem of modules and packages to extend its functionality. We installed some earlier if you followed the getting started section.

# Importing a module

import math

import numpy as np

# Using a module function

print(math.sqrt(16))

# using numpy

arr = np.array([[1, 2],

[3, 4]])

print("Matrix: \n", arr)

6. File Handling

Python provides functions for reading from and writing to files.

# Opening a file for reading

with open("file.txt", "r") as file:

content = file.read()

# Opening a file for writing

with open("new_file.txt", "w") as file:

file.write("Hello, World!")

7. Error Handling

Use try and except blocks to handle errors gracefully.

try:

# Code that might raise an exception

except ExceptionType:

# Code to handle the exception

else:

# Code to execute if no exception is raised

finally:

# Code that always runs, regardless of exceptions

8. Object Oriented Programming

Python supports object-oriented programming (OOP) principles like classes and inheritance.

class Animal:

def __init__(self, name):

self.name = name

def speak(self):

pass

class Dog(Animal):

def speak(self):

return f"{self.name} says Woof!"

# Creating objects

dog = Dog("Buddy")

print(dog.speak())

9. Conclusion

This Python tutorial covered the fundamental concepts of the Python programming language, including variables, data types, control structures, functions, modules, file handling, error handling, and object-oriented programming. Python is a versatile language with a vast ecosystem of libraries and frameworks, making it suitable for your Neurotech projects.

Inportant Python Libraries for Data Analysis

By Manik Sethi for Neurotech@Davis

Now that we've covered the basics of Python, we will dive into libraries which give us additional functionality. In this tutorial, we will cover NumPy and Pandas . To follow this tutorial, make sure you have Python installed on your computer and a text-editor ready to work with.

Table of Contents

GOAT STATUS

1. Getting Started

1.1. Making Sure You Have PIP

Since libraries are add-ons to the basic version of Python, we need to install them onto our machines. We will be using the package installer for Python, which is called pip. First, we will ensure that the machine you're working on has pip. To do so, open up a terminal window and type the following command:

MacOS

python -m ensurepip --upgrade

Windows

py -m ensurepip --upgrade

Typically, pip comes with the installation of Python in your system. But we will run these commands as a preventative measure for any errors down the line.

Now, we will install the packages onto our system. Enter the following terminal commands. 1

pip install numpy

pip install pandas

1.2. Importing Libraries

Now that we have our libraries installed, we can call upon them in. our .py files. To use the functionalities of our library in a given .py file, we type this at the very top.

import numpy as np

import pandas as pd

Let's break down these lines phrase by phrase

importfollowed by the library name tells our code to load in the library, allowing us to access its functions and variables.- Using the

askeyword let's us shorten our library name and give it a nickname 2. That way, instead of having to call functions usingnumpy.function(), we can just donp.function()

2. NumPy

Now that we have imported NumPy, let's access and use it's functions and variables. For the sake of being concise we won't cover everything, but here is the documentation.

2.1. Array Fundamentals

Arrays will be incredibly useful for neurotech applications. All the brain data we will collect needs to be stored, and the np.array() datatype allows us to do so while also providing high functionality. Let's start by creating an array, which is just a list of objects.

Input:

import numpy as np

a = np.array([1, 2, 3, 4, 5, 6])

a

Output:

array([1, 2, 3, 4, 5, 6])

We used the np.array() function which takes in one argument: a list. However, this is just a one dimensional list, also known as a vector. In our use cases, it may be useful to have list of lists, also known as matrix. We can initialize one as shown below

matrix = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

NumPy also comes with functions that return attributes of our array

matrix.ndimreturns number of dimensions of the array. Since we made a 2D matrix, it'd be2matrix.shapereturns the shape of our array, think of this as the length and width of our array, which is(3,3)matrix.sizetells you how many total elements exist in our array, which in our case is9

If we used basic Python lists, we'd have to define these shape, size, and other functions on our own which becomes redundant quickly. NumPy takes care of this and lets us work on the important parts of the project.

2.2. Indexing and Slicing

The data we collect will also need to be manipulated. Thankfully, NumPy takes care of this. Sometimes we only want to work with specific parts of our data because of it's temporal importance (when it happened) or spatial importance (where it happened)

import numpy as np

...

data = np.array([[1, 7, 3, 9],

[2, 5, 0, 4],

[7, 3, 4, 1],

[8, 6, 0, 2]])

data[0]

data[2][1]

data[3]

data[5][0]

The integers inside the [] such as [0] represent the index of the element to retrieve. Since Python is 0-based, [0] is the first element in the array. Therefore, [2][1] is the first element of the second element! Here is a great resource for Indexing in Python. As practice, guess what the previous code snippet will return. The answer will be in the footnotes 3

Slicing lets us manipulate our matrix by cutting out specific parts. We will continue using the data variable from the previous example. If we want to access the first two objects inside data, we can write out the following line of code:

data[0:2]

the [0:2] lets our computer know we only want objects from the 0th index, up to the 2nd index (exclusive4). Here are the rules for slicing:

data[x:y]returns an object with elements from the xth index to yth indexdata[x:]returns an object with all elements starting from the xth indexdata[:y]returns an object with all elements up till the xth index

2.3. Reshaping and Flattening

Sometimes we need to reshape our data to execute certain operations on it. NumPy comes with a .reshape() function which takes in two arguments, the number of rows and columns

Input:

a = np.array([1, 2, 3, 4, 6])

b = a.reshape(3, 2)

Output:

array([1, 2, 3, 4, 5, 6])

array([0, 1],

[2, 3],

[4, 5] )

We reshaped our flat array a to have 3 rows and 2 columns. NumPy also has a function to go from an arbritary shape array back to a flat array.

Flattening our data gets rid of the dimensionality, and turns our higher dimensional data into a 1-D vector.

Input:

c = b.flatten()

Output:

array([1, 2, 3, 4, 5, 6])

No parameters are needed, since it will always turn the array back into a one dimensional list.

Reshaping and flattening are crucial operations for making sure our data is compatible with certain operations, such as matrix multiplication. If the dimensions don't match, we can reshape our data to fix it.

3. Pandas

Moving on, we will cover the basics of pandas. Once again, here is the link for the comprehensive documentation

3. 1. Dataframes

Pandas is used for two-dimensional tabular data, such as data stored in spreadsheets. The most common datatype we work with in pandas is called a DataFrame. Let's make one right now.

import pandas as pd

df = pd.DataFrame(

{

"Name": ["Avni", "Aryaman", "Sofia", "Manik", "Grace", "Priyal"],

"Sex": ["female", "male", "female", "male", "female", "female"],

}

)

Here is a table of the current projects division board members. If we print it out, we would get the following |index|Name|Sex| |---|---|---| |0|Avni|female| |1|Aryaman|male| |2|Sofia|female| |3|Manik|male| |4|Grace|female| |5|Priyal|female|

Similar to NumPy, pandas has functions and indexing features allowing you to return rows and columns.

df["{column_name}"]returns the column corresponding to the column namedf.iloc[x:y]returns the rows ranging from indexxto indexy(exclusive)

3.2. Reading and Writing

Most likely, you will be creating dataframes with pre-existing csv files from data collection. Luckily, Pandas supports the ability to read from these files

data = pd.read_csv("directory/data.csv")

The variable data has a type DataFrame, which means all the previous functions we used can be applied here as well.

data.head(x)will display the firstxrows of the dataframedata.tail(x)does the opposite and displays the lastxrows of the dataframedata.shapereturns the shape of our dataframe

To write out the tabular data we have manipulated, we can use the function

df.to_csv("board_members.csv", sheet_name="board")

Here is a link for a more comprehensive guide towards dataframe indexing

4. Conclusion

This Python tutorial covered important libraries that will be relevant to your work in signal pre-processing and manipulation. Libraries such as NumPy and Pandas come with a vast amount of functionality, allowing users to focus on meaningful work, and generate results quickly.

sometimes python decides to be funny and requires you type pip3 instead of just pip. Please be mindful of what python version you have installed. If it's Python3, do the former.

2: When choosing a nickname, make sure it's intuitive as to what library it's referring.

3: [1 7 3 9], 3, [8 6 0 2], IndexError. An IndexError occurs because there is no value at the [5] index of our variable.

4: By exclusive, we mean that data[0:2] will not include the element at the 2nd index

Overview of Neuroscience and EEGs

By Aryaman Bhatia and Grace Lim for Neurotech@Davis

Here, we discuss the underlying neuroscience related to EEGs and Event related potentials, and learn about the electrical knowledge behind EEGs.

What is EEG?

EEG stands for Electroencephalogram, which is a method for recording electrical brain activity. Like the name suggests, EEG uses electrodes (for our purposes, on the scalp), to pick up neural activity from a local cluster of neurons beneath the skull. This neural activity is thought to be is specifically generated from post-synaptic potentials from pyramidal cells. These post-synaptic potentials along the cortex create a summation of dipoles which are picked up from the electrodes, which gives rise to the voltage recorded from the scalp. This works in a way of volume conduction, where this propagation of current causes this instantaneous voltage (Luck, 2014, Chapter 2).

Strengths and limitations

The main strengths for EEG are its good temporal resolution, accessibility, and non-invasiveness (for scalp EEG). For people doing EEG-based projects, the main draw should be the use of temporal resolution (e.g, measuring latency in responses or waveforms, in relation to brain activity). However, there are quite a few relevant limitations to EEG; those being its spatial resolution and noise. Spatial resolution refers to our ability to localize activity from the brain. If temporal resolution is the “when”, then spatial resolution is the “where”. The other drawback is noise. Since EEG is picking up electrical activity from our brain, you can imagine all the other kinds of background electrical that can contaminate your data. For example, the most obvious would be the 60Hz line noise if you are in the US. Knowing this, there are several ways to clean up your data, which we will explain in later articles or in the suggestions for further reading.

Active, Reference, Ground

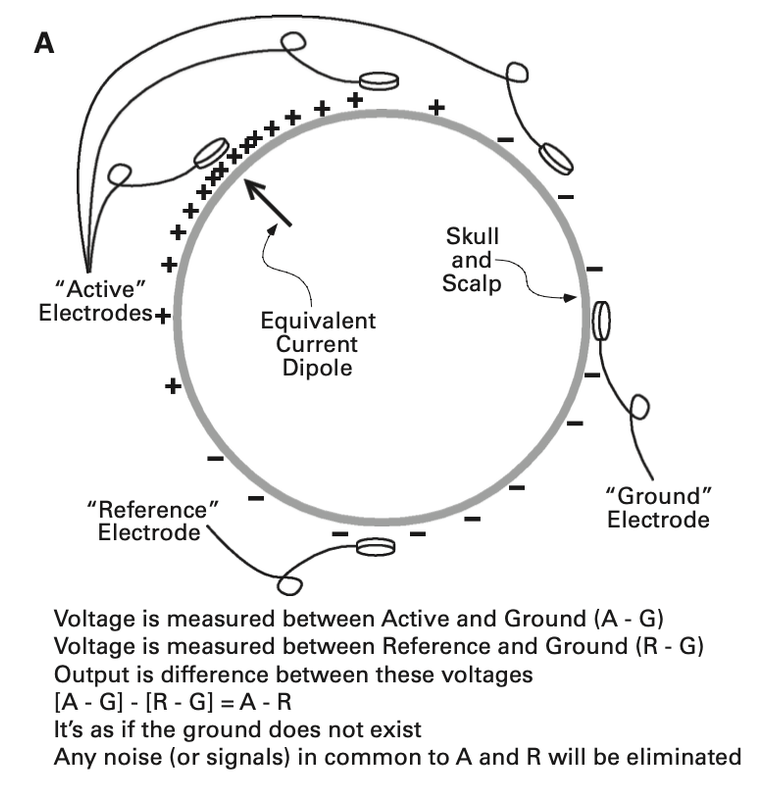

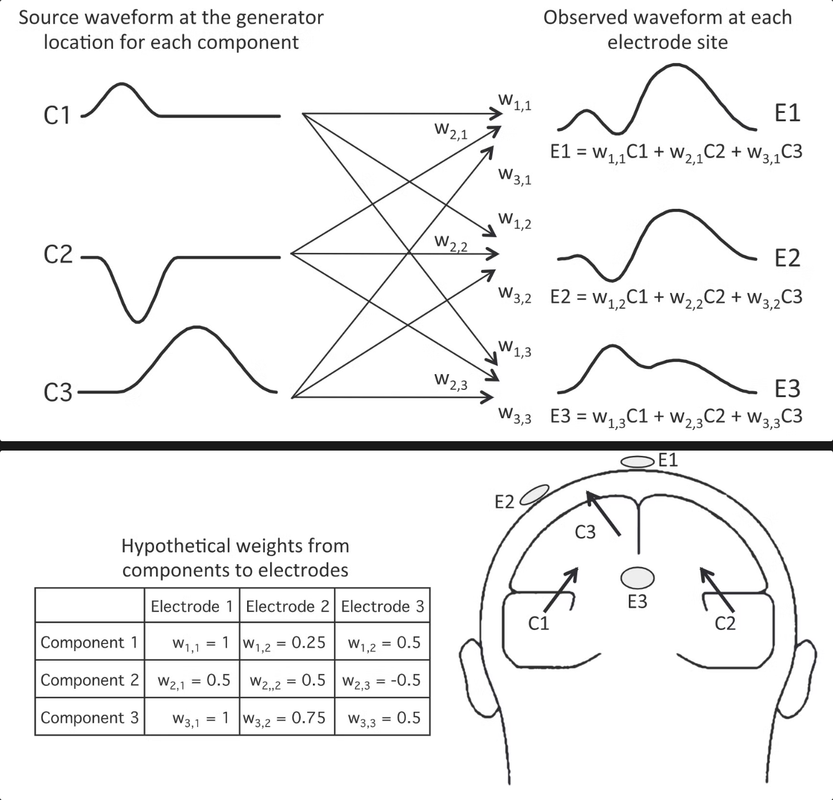

The active, reference, and ground are three different types of electrodes that are combined to provide a single channel of EEG. This is because EEG is always recorded as potential for current to pass from one electrode (active) to usually a ground electrode (Luck, 2014, Chapter 5). If this concept of electricity and magnetism is confusing, I highly recommend reading Luck’s second chapter “A Closer Look at ERPs and ERP Components”. The term “absolute voltage…refer[s] to the potential between a given electrode site and the average of the rest of the head”, (Luck, 2014, Chapter 5). What this means is that the potential between the active electrode and the ground electrode is simply the difference between these two absolute electrodes. If you are familiar with basic physics, then you can understand why there is no voltage at a single electrode: you have to have voltage from two sources for everything because voltage is the potential from one source to another.

For some background when describing the reference electrode, it is important to know that EEG recording systems typically solve the problem of noise in the ground circuit by using what are called differential amplifiers (Luck, 2014, Chapter 5). The reference electrode, in theory, is supposed to be away from the brain activity we are directly measuring, using it to subtract from each active and ground electrode. This makes sense practically, as we are creating a sort of baseline for recording neural activity in order to get rid of any common noise. Here is a section from one of the figures used from Luck in the fifth chapter, that helped me understand this concept:

The purpose of the ground electrode can be described as “when voltage is initially measured between two electrodes, one of the two electrodes must be connected to the amplifier’s ground circuit, and this circuit picks up noise from the amplifier circuitry”, (Luck, 2014, Chapter 5). You also may now be wondering why we can’t record the voltage directly between the active and the reference, but the reasoning for this is because “without connecting R to the ground circuit (in which case it would simply be the ground electrode). Thus, most systems use a differential amplifier to cancel out the noise in the ground circuit”, (Luck, 2014, Chapter 5).

To tie all these concepts together, you should know that “the electrical potential between the subject’s body and the amplifier’s ground circuit is called the common mode voltage (because its common with the active and ref electrodes)” and that “to achieve a clean EEG recording, this common mode voltage must be completely subtracted away”; however, this is not as perfect in implementation (Luck, 2014, Chapter 5).

For practical implementation of these ideas: the location of the ground electrode is trivial, so feel free to place it anywhere on the head. However, the site of the reference electrode requires a little more thinking. Luck provides some advice: “given that no site is truly electrically neutral, you might as well choose a site that is convenient and comfortable”, “also close to the site of interest and one that other researchers use”, and “you want to avoid a ref site that is biased toward one hemisphere”, (Luck, 2014, Chapter 5). I would recommend for our purposes to place your reference on the electrode on the mastoid(s) to stay consistent with other researchers.

ADC

As you may know, your brain waves are a continuous process (I would hope so), so we call this an analog signal. When we record brain activity through the EEG, the computer is undergoing a process called digitizing the EEG. This means “the EEG is an analog signal that varies continuously over a range of voltages over time, and it must be converted into a set of discrete samples to be stored on a computer”, (Luck, 2014, Chapter 5). You can think of these samples as a point in time. This is where the principle of sampling rate comes in: “The continuous EEG is converted into these discrete samples by a device called an analog-to-digital converter (ADC)” and “the sampling period is the amount of time between consecutive samples (e.g., 4 ms), and the sampling rate is the number of samples taken per second (e.g., 250 Hz)” (Luck, 2014, Chapter 5). 1 Hz is equivalent to 1 cycle per second, you can do the math! Another important concept to understand when talking about sampling rate is the Nyquist Theorem: “which states that all of the information in an analog signal such as the EEG can be captured digitally as long as the sampling rate is more than twice as great as the highest frequency in the signal” (Luck, 2014, Chapter 5). The reason this is important, is because of aliasing: if you sample at lower rates, you will induce artifactual low frequencies in the digitized data. If this is confusing, think of a real world example of aliasing: car tires turning backward when going fast bc our eyes aren’t sampling fast enough.

What is an event related potential?

An event related potential, as the name suggests, represents changes in electric potential in response to an event, for example a light flashes to the test subject as part of an experiment, which are caused by a neural site in the brain.

More formally, an event related potential, or ERP, is defined in (Luck, 2014) as follows. “An ERP component can be operationally defined as a set of voltage changes that are consistent with a single neural generator site and that systematically vary in amplitude across conditions, time, individuals, and so forth. That is, an ERP component is a source of systematic and reliable variability in an ERP data set.”

This means that if an ERP component exists, it can be consistently seen occur in other experiments, with slight changes depending on the conditions, time, individual etc, caused by the same neuroanatomical site.

How are ERPs generated?

Now let’s look at the underlying biology of how ERPs are generated. Most ERPs are generated as a result of postsynaptic potentials. Postsynaptic potentials are the voltages that occur when neurotransmitters bind to the receptors of postsynaptic cell.

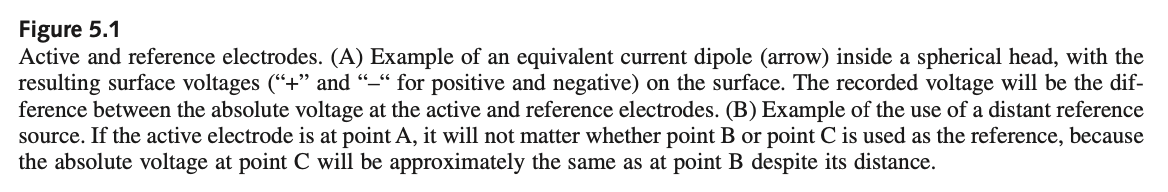

Mainly, ERPs are resulted due to pyramidal neurons, which are the most populous cells of the excitatory type of cells brain. The following is a diagram showing the structure of the same.

When an excitatory neurotransmitter is released, electrical current in the form of positively charged ions will flow from the extracellular space into the cell, making a negative charge in the region of the apical dendrites. To complete the circuit, current flows out of the basal dendrites, creating a dipole, which refers to (https://byjus.com/question-answer/what-is-an-electric-dipole/) a pair of electric charges of equal magnitude but opposite sign, separated by some (usually small) distance. (When the neurotransmitter is inhibitory, the flow of current is opposite, so the polarity of the recorded signal is the opposite. But as polarity usually doesn’t tell us much, it is not something you have to worry thinking about.) Put simply, it is the summation of multiple of these dipoles from around the brain, when certain conditions are met, that produces the measurable voltage in the EEG.

Large numbers of neurons must be activated at the same time. • The individual neurons must have approximately the same orientation. • The postsynaptic potentials for the majority of the neurons must arise from the same part of the neurons (either the apical dendrite or the cell body and basal dendrites). • The majority of the neurons must have the same direction of current flow to avoid cancellation.

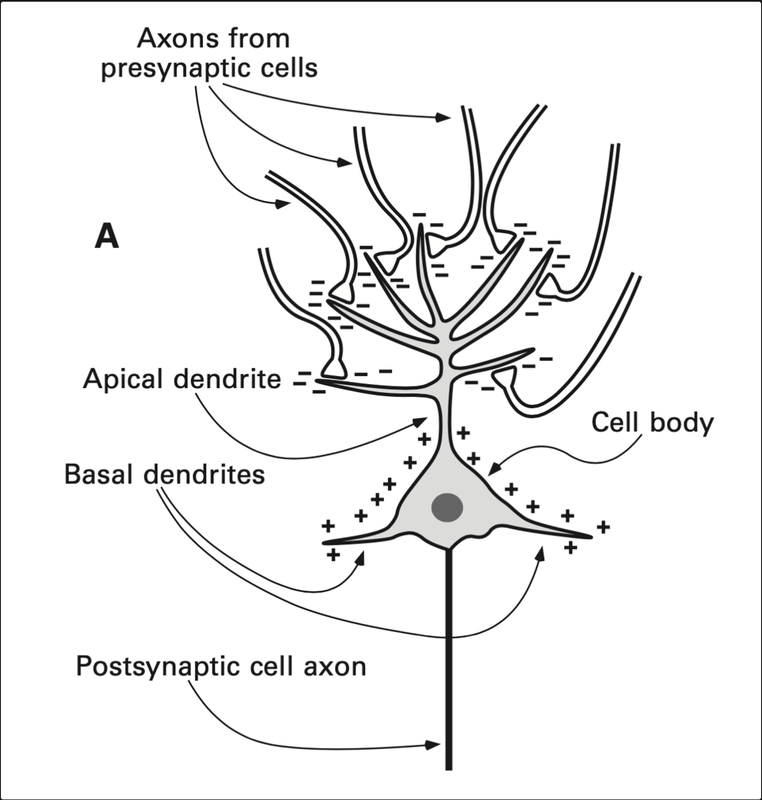

When you look at an ERP waveform, it is actually the weighted sum of multiple components in the brain. By weighted, we mean that each component waveform has a weighting factor, that is determined based on the location from where it is coming, the orientation of the dipole and the conductivity of tissues that form the head. The following diagram illustrates this:

Here, C1, C2, C3 represent the represent the waveforms at different locations in the brain. Each of them is then multiplied by the corresponding weights, and then all are summed up to give us the waveform that is picked up by the electrodes at their respective site.

While this example shows three components, in general the voltage at a given electrode site is the result of almost all the underlying components in the brain. Electrodes can pick up dozens of components in the brain during recording. There is no foolproof way of identifying which exact components result in the observed waveform. This is called the superposition problem.

All in all, when looking at your waveforms in the OpenBCI GUI, know that they are the result of the weighted sum of multiple other electrical signals in and around the electrode site, and not the singular exact signal of that site.

ERP components

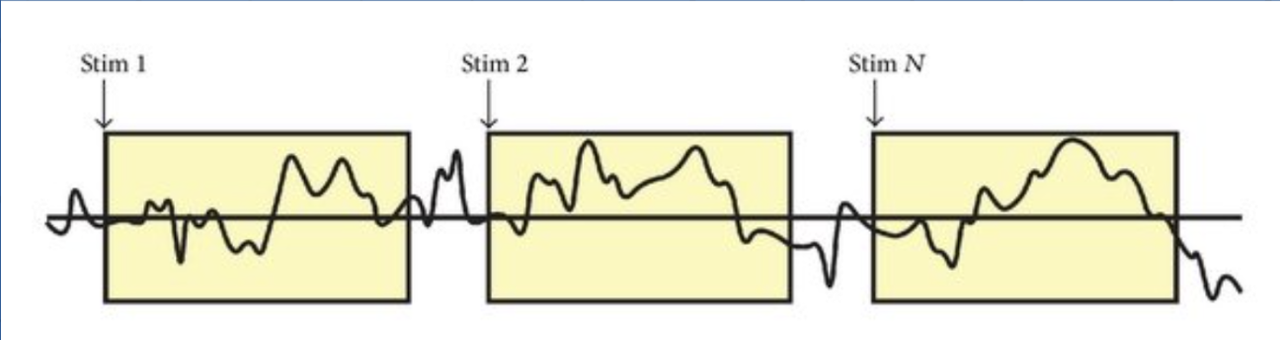

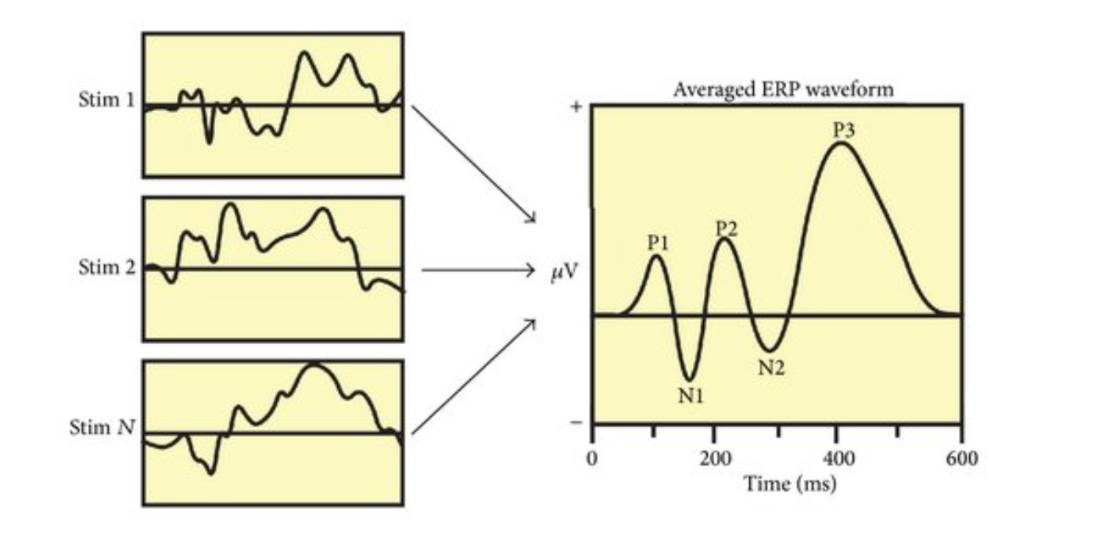

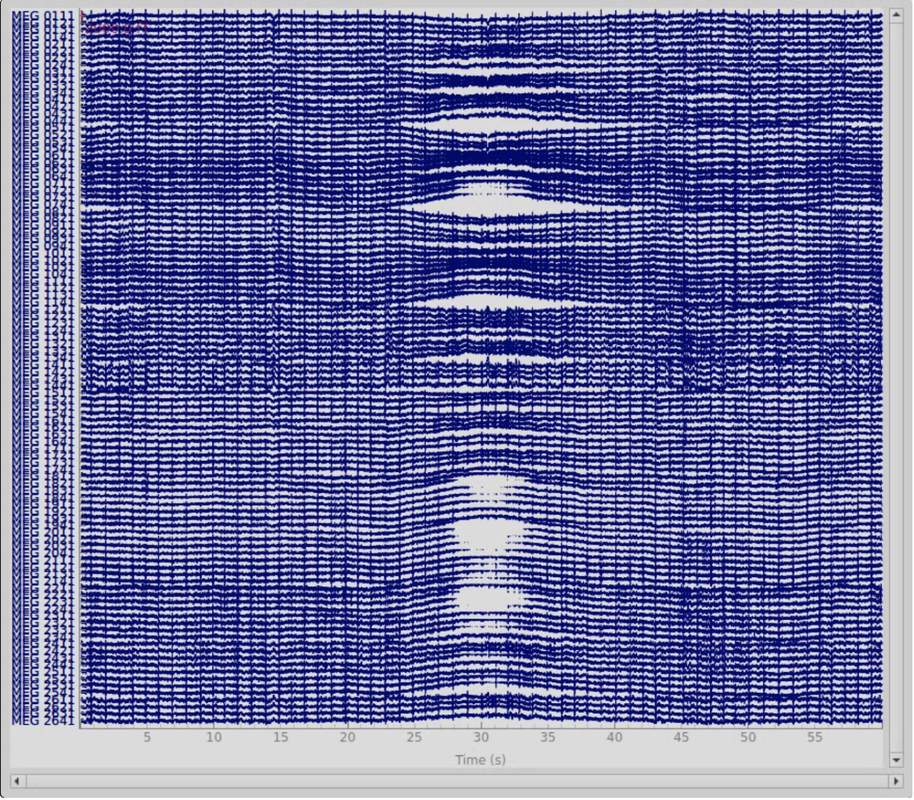

First, a participant is shown a stimulus.

We see here that each time the participant is shown that stimulus, the corresponding brain waves look very different each time. This is because the brain is doing many things at one point in time, and the EEG records all of it. So each of these segments represent the combined brain activity of everything it is doing in that moment.

So how do we isolate the brain activity for just the stimulus? For that, we do something called averaging.

Here, we take all the segments and average them out, which removes the other brain activity, leaving behind the consistent brain response that our brain has for that stimulus. ERPs are small relative to the noise level and many trials are usually required to accurately measure a given ERP effect. You must have usually 10 to 500 trials per condition in each subject to achieve sufficient statistical power.

Some examples of some common ERP are the P300 and N170. This is a positive deflection in the EEG signal that occurs about 300ms (approximately) after a stimulus. It's associated with decision making, attention, and memory processing. The P300 is often studied using the "oddball paradigm". This is an experimental design where participants are presented with a sequence of stimuli where one type of stimulus (the "oddball") occurs infrequently among more common stimuli. When participants detect the oddball stimulus, it typically elicits a larger P300 response, reflecting the allocation of attention to the rare event.

The N170 is a negative deflection occurring about 170ms after stimulus onset. It's particularly responsive to face stimuli and is thought to reflect early stages of face processing.

It is important to note, however, that not all mental processes have an ERP signature. This can be because the signal may be too deep in the brain to be picked up by surface level electrodes, or the neural process occurs too fast. Hence, it may be difficult to find any clear contribution to the scalp-recorded voltage.

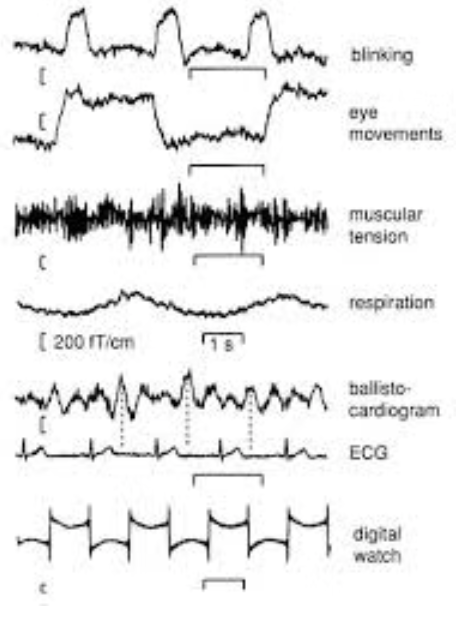

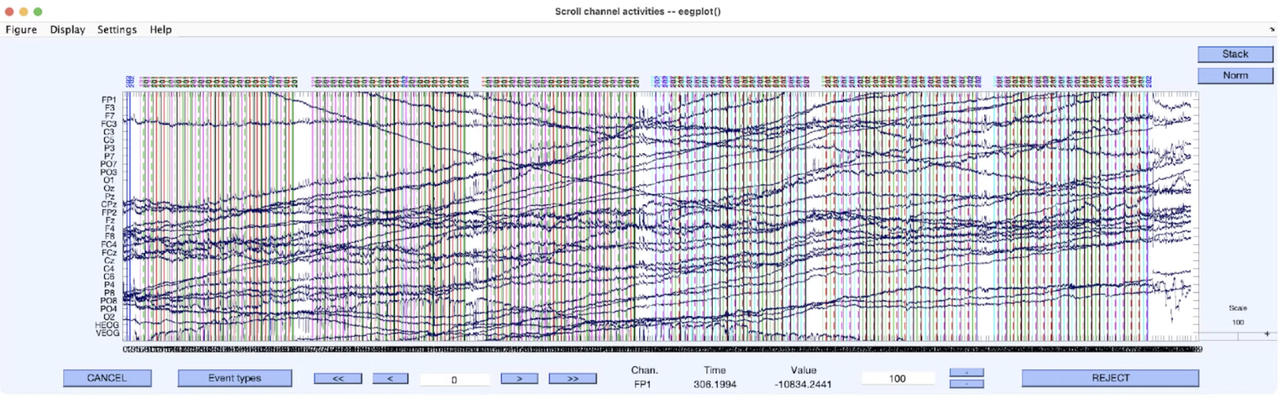

Cleaning your data

When you have finally collected all your data, your data will consist of both EEG data and unwanted electrical data not related to your experiment. This could include things such as electrical signals from lights and computers, eye blinks, muscle activity, etc. which are unrelated to your experiment. These are called artifacts.

It is important to get rid of these as they can cause unwanted peaks and distortions to your data, make it unreliable to find the actual ERP from your experiment.

There are two methods for dealing with artifacts: artifact rejection and artifact correction. Artifact rejection involves identifying and removing segments of data that contain artifacts. Artifact correction attempts to remove the artifact while preserving the underlying neural signal. There are multiple ways to do this, and one that has been discussed below is Independent Component Analysis. There are other methods which have been linked.

Blinks

A very common artifact is blinking. Each eye has a constant electrical potential between the cornea and the retina. The voltage recorded from electrodes near this site are called electrooculogram, or EOG as you may see labelled in MNE. When the subject blinks, this potential is changed, causing the artifact.

Blinking is also a good way to make sure your headset is recording data. Tell your subject to blink rapidly, and some very obvious deflections should occur. You can also do jaw clenches.

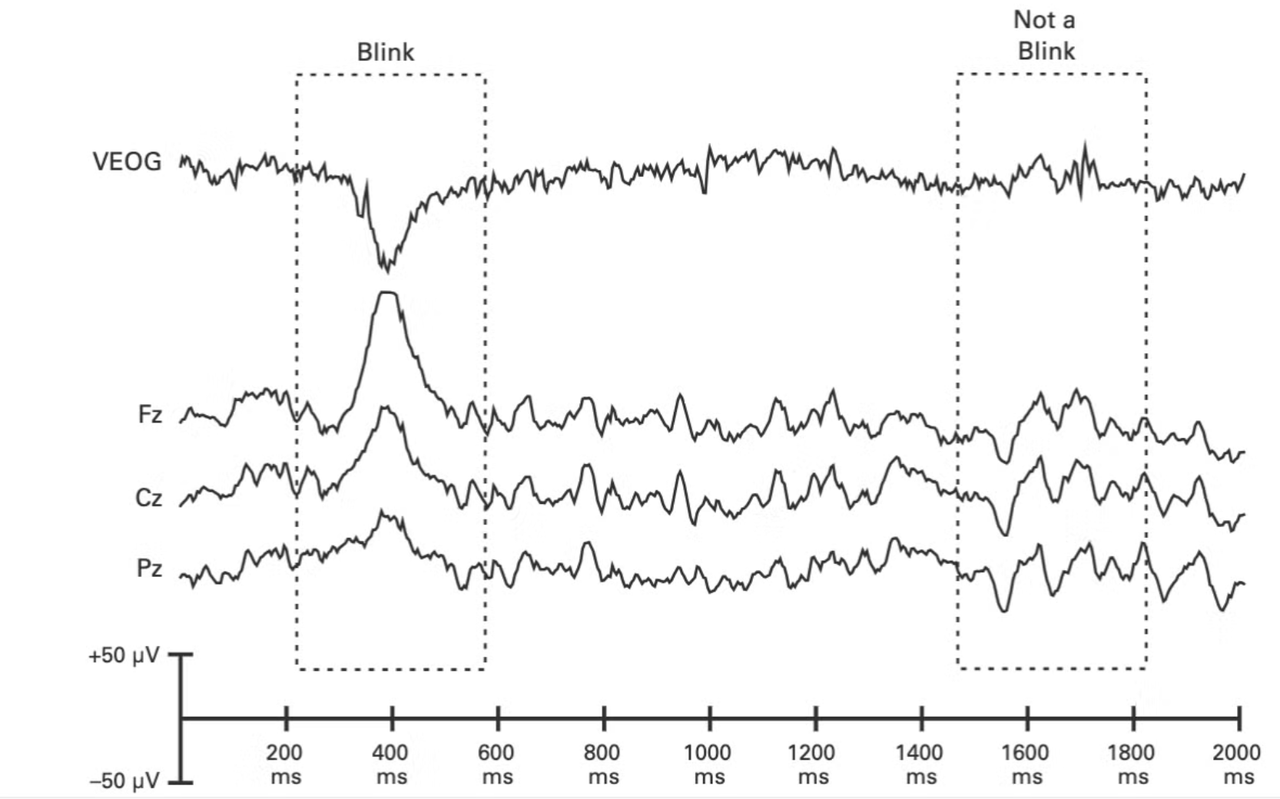

Blink potentials are such that they have negative polarity under the eye, and positive polarity over the eye. Here, you can see the negative polarity in the vertical EOG and see the difference between a blink and not a blink.

Since there is a clear negative polarity, one method of removing these artifacts is to conduct two separate recordings, one with the electrode below the eye and one above. You can then subtract the lower -minus the upper (lower - upper) and see the eye blink to see them even more clearly.

In MNE, you can also use the functions create_eog_epochs() which takes the artifacts and puts them into epochs. You may need to fine tune the functions parameters before using it, so be sure to have a look at the documentation in case you don’t. https://mne.tools/stable/auto_tutorials/preprocessing/10_preprocessing_overview.html#ocular-artifacts-eog

Eye movements

As in eye blinks, eye movements also result from the dipole in the eye. The front of your eye has positive charge. When you turn to a certain direction, positive charge gets accumulated in that part of the face, and negative on the other side.

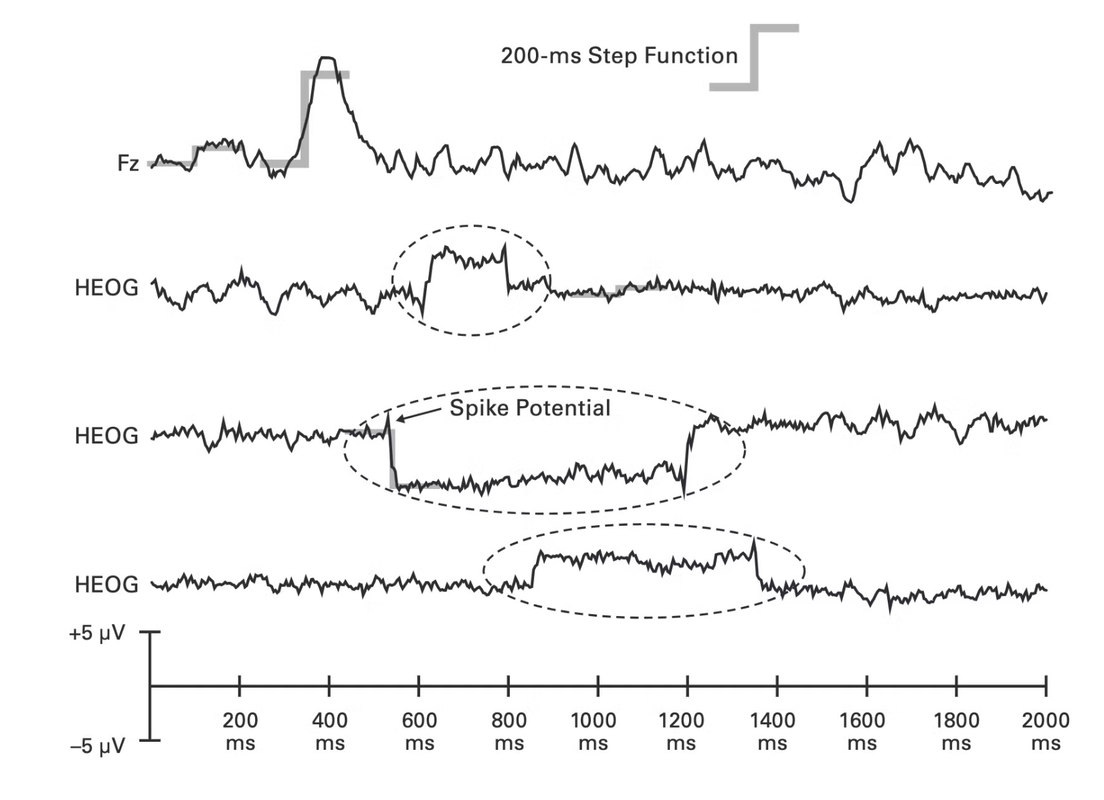

In general, eye movements show a sharp movement deflection in the waveform and then back to original position as below. HEOG stands for horizontal eog.

You can also use the create_eog_epochs() function in MNE. Again, make sure to see if you need to fine tune the function before using it. https://mne.tools/stable/auto_tutorials/preprocessing/10_preprocessing_overview.html#ocular-artifacts-eog

Low voltage drifts

Low voltage drifts are caused by small movements in the position of the electrode, which can be due to the subject moving during the experiment or sweat. The change in movement causes impedance to change, leading in sustained shift in voltage.

It often looks something like this, and can be detected by visual inspection

It’s a good idea to zoom out in your program and observe these drifts. You can remove these by applying a high pass filter and making sure the subject does not move much during the experiment. (Normally 0.1Hz, but may be subject to change based on your data)

Power line noise

For detecting power line noise, first convert your data into frequency domain. Then, notice if there is any large spike at the 60Hz mark. Apply filter to remove this. EZ

Muscle and heart activity

Muscle and heart activity can create significant artifacts in EEG recordings. Muscle artifacts are typically caused by tension in facial or neck muscles, which can produce high-frequency noise in the EEG signal. These artifacts are often characterized by sudden, sharp spikes or sustained periods of high-frequency activity.

Heart activity, particularly the QRS complex of the heartbeat, can also introduce artifacts into EEG recordings. These are known as electrocardiogram (ECG) artifacts and appear as regular, rhythmic spikes in the EEG data.

Speech can also create artifacts due to the movement of facial muscles and changes in skull pressure. These artifacts are often more complex and can vary based on the individual and the type of speech.

To detect and remove these artifacts, MNE-Python provides several functions. For muscle artifacts, you can use create_eog_epochs() or create_ecg_epochs() to identify epochs containing these artifacts. For heart-related artifacts, the find_ecg_events() function can be useful. These functions help in identifying and potentially removing segments of data contaminated by muscle and heart activity.

It's important to note that while these artifacts can be problematic, they also contain valuable physiological information. Therefore, careful consideration should be given to the balance between artifact removal and preservation of relevant neural activity.

Independent Component Analysis (ICA)

Independent Component Analysis (ICA) is a powerful technique used in EEG data processing to separate and remove artifacts from the signal. It's particularly useful for dealing with complex artifacts that are difficult to remove using simple filtering methods.

Here's how ICA works in the context of EEG data cleaning:

- Decomposition: ICA decomposes the EEG signal into statistically independent components. Each component represents a source of activity, which could be brain activity or an artifact.

- Identification: After decomposition, components that represent artifacts (e.g., eye movements, muscle activity, or heartbeats) are identified. This can be done manually by visual inspection or using automated methods.

- Removal: The identified artifact components are then removed from the data.

- Reconstruction: Finally, the cleaned EEG signal is reconstructed using the remaining components.

ICA is particularly effective for removing ocular artifacts (like eye blinks and movements) and can also help with other types of artifacts that have consistent spatial patterns.

In MNE-Python, you can perform ICA using the mne.preprocessing.ICA class. Here's a basic example of how to apply ICA:

from mne.preprocessing import ICA

# Create ICA object

ica = ICA(n_components=20, random_state=97)

# Fit ICA

ica.fit(raw)

# Plot ICA components

ica.plot_components()

# Apply ICA to the raw data

raw_clean = ica.apply(raw)

Other methods include:

https://mne.tools/stable/auto_tutorials/preprocessing/35_artifact_correction_regression.html

https://mne.tools/stable/auto_tutorials/preprocessing/50_artifact_correction_ssp.html

Oscillations

An neural oscillation is the rhythmic and/or repetitive electrical activity generated spontaneously and in response to stimuli by neural tissue in the central nervous system. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3811101/#:~:text=The term “brain (or%20neural,processes%20has%20become%20increasingly%20evident.

The following discusses some common neural oscillations.

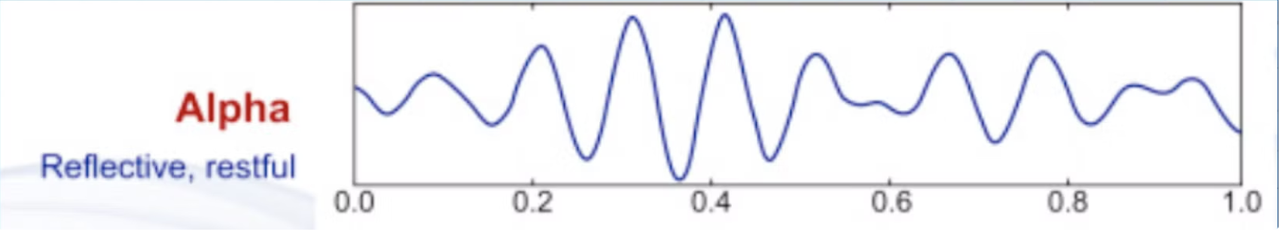

Alpha waves

Alpha waves are a type of brainwave associated with a relaxed yet alert state of consciousness. They typically have a frequency range of 8 to 13 Hertz (Hz) and are often observed when a person is awake but in a calm and unfocused state, such as during meditation or daydreaming. Alpha waves are characterized by regular, smooth oscillations and are commonly found in the posterior regions of the brain. Their presence can be an indicator of a relaxed mind.

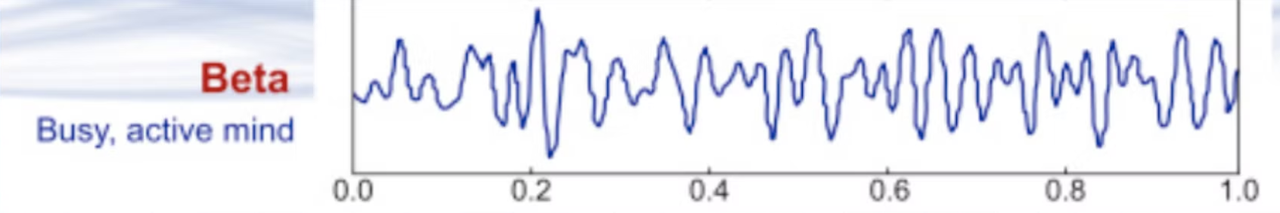

Beta waves

Beta waves are higher-frequency brain waves ranging from 13 to 30 Hz. These waves are associated with active, alert, and focused mental states. When you're awake, engaged in problem-solving, or concentrating on a task, your brain often generates beta waves. They are prevalent in the frontal and central regions of the brain and are linked to cognitive functions like attention, decision-making, and active thinking.

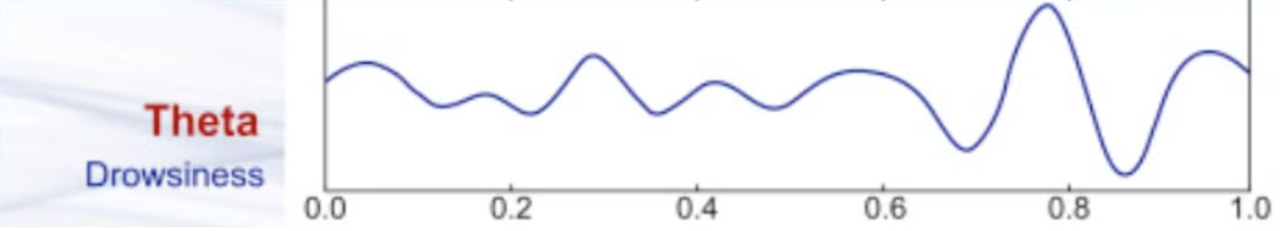

Theta waves

Theta waves have frequencies ranging from 4 to 7 Hz. They are commonly found during light sleep, meditation, and deep relaxation. Theta waves are also associated with creative thinking, intuition, and the early stages of memory formation. When you experience vivid dreams during REM (rapid eye movement) sleep, your brain often produces theta waves, contributing to the dream experience.

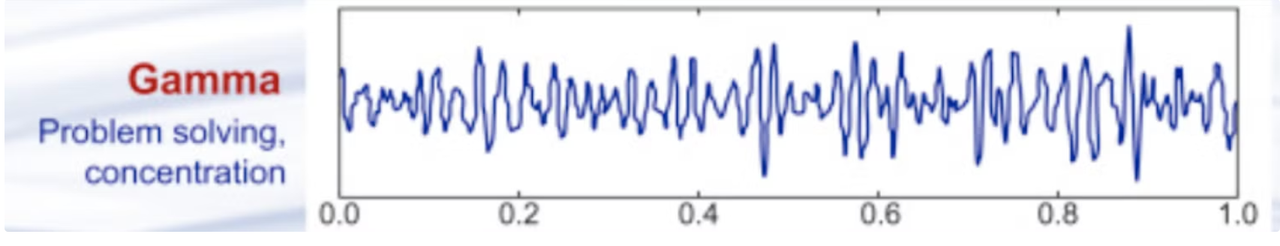

Gamma waves

Gamma waves are the fastest brainwaves, with frequencies exceeding 30 Hz. They are thought to play a role in complex cognitive processes, such as memory, perception, and consciousness. Gamma waves are often associated with the binding of sensory information and the integration of different brain regions' activities. Their presence is vital for forming coherent thoughts and experiences. Please note that it is currently impossible to record true gamma oscillations from scalp EEG; this is typically achieved through intracranial recordings.

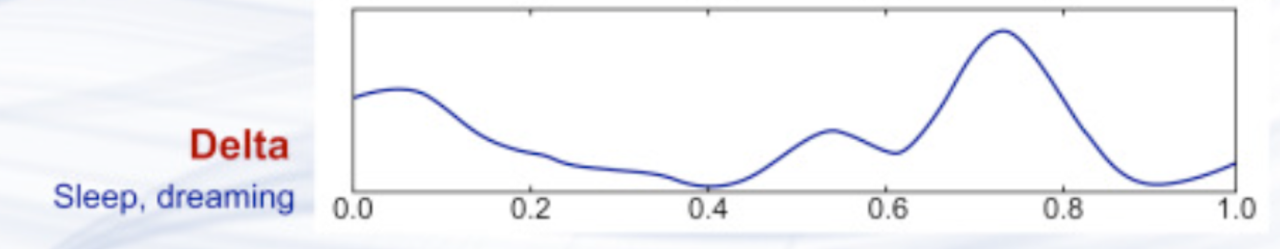

Delta waves

Delta waves are slow brain waves with frequencies below 4 Hz.

They are predominantly observed during deep sleep stages, such as Sleep Stages 3 and 4.

Important note: While frequency bands are often labeled with specific ranges (e.g., alpha: 8-13 Hz), the function of oscillations in the same frequency range can differ depending on the brain region and the context in which they occur.

For example, Mu oscillations (8-13 Hz) over the motor cortex are related to motor functions, such as motor planning, execution, or the inhibition of movement. In contrast, alpha oscillations (8-13 Hz) over the visual cortex are associated with attention and sensory processing, often linked to the suppression of visual input when not actively engaged in a visual task.

This highlights that the same frequency band (e.g., 8-13 Hz) can reflect different processes depending on the brain area and context. So, while the frequency range is consistent, the meaning and function of those oscillations are not. Frequency bands don't necessarily always map strictly to specific neural processes; the spatial location and task demands are critical in determining their role.

Filtering

Let us now discuss filtering. Before we discuss filtering, let’s make sure we understand well exactly what is going on when we look at a waveform in our program.

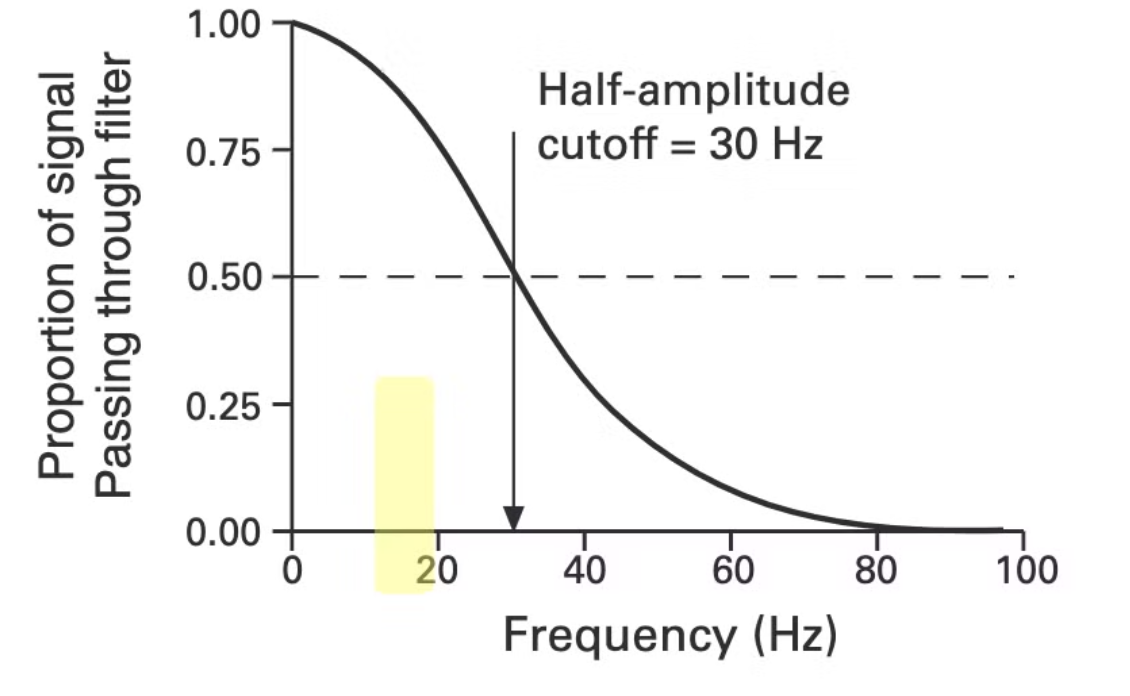

A filter suppresses or passes frequencies, which can be used to remove noise and isolate certain frequencies. A filter can be represented as follows:

This is called the frequency response function of the filter. This function shows exactly what how much of the frequency it will let pass from the waveform. For example, when we convert our waveform to the frequency domain, the frequency 30Hz will get halved, and the frequency 80Hz will get removed, ie filtered out.

Now that we know what a filter is, let’s talk about some different types of filters and how to use them.

Low-pass filter: A low pass filter, or a high block filter, is a filter that allows frequencies lower than the specified frequency to pass and blocks any filters higher than the number.

High-pass filter: A high pass filter, or a low block filter, is a filter that allows frequencies higher than the specified frequency to pass and blocks any filter lower than the number.

Notch filter: Removes one frequency and passes the rest.

Band-pass filter: Combination of low pass and high pass. You can define the low end and high end of which frequencies you want to pass or keep.

When should you filter?

In general, it is a good idea to filter between 0.1-30Hz. The 0.1Hz helps fix the drifts as discussed earlier in our experiment, and the 30Hz can help counteract muscle movements and line noise, however should change this based on your experiment. Here are some more special cases to help you select a filter:

- If you are looking at slow or late components, you can consider using 0.1Hz or 0.05Hz

- If you are looking at responses which happen under 50ms such as the auditory brainstem response, you should use higher cutoff frequencies for both low-pass and high-pass filters. You should do some research before selecting them.

Citations

Luck, Steven J. An introduction to the event-related potential technique. MIT press, 2014.

https://www.researchgate.net/figure/The-event-related-potential-ERP-technique-A-raw-EEG-record-is-punctuated-by-the_fig3_299472589

https://www.researchgate.net/figure/Brain-wave-samples-with-dominant-frequencies-belonging-to-beta-alpha-theta-and-delta_fig1_364388814

https://mne.tools/stable/index.html

https://www.youtube.com/watch?app=desktop&v=-71ppPF02qw

Overview of EEG Experimental Design

By Sofia Fischel and Grace Lim for Neurotech@Davis

General Structure of EEG Experiments

The general structure of EEG experiments consists of four main components: stimuli, trials, participants, and responses. Stimuli is a physical item or event that the participant is reacting to. It can take any form, but usually the type of stimuli informs the design of the experiment. For example, some common stimuli presentations are the Posner cueing task, Stroop test, and detection of stimuli (using luminance). The type of stimuli used must be specific to purposely not elicit any unwanted behavior. These are known as sensory confounds, which will be covered later in this article. A trial defines how long a participant is presented with a stimulus (stimulus onset) and their response. More importantly, the researcher needs to think about how many trials will be done by the participant. Typically, a set number of trials is defined by a block. For example, you may have 50 trials within a block and have 10 blocks total. The reasoning for splitting it up like this is to organize your data, especially if you plan to manipulate some stimuli within blocks or simply to have a time to give the participant a break. There is no commonly set amount of trials, so it’s best to refer to previous research done on a similar topic to figure out this aspect of design. Another component of the experiment is the participants. The researcher must consider how many participants they will have complete the experiment as well as the demographic. If you are not interested in a specific subset of the population, it is best to diversify the participant pool (age, sex, location, etc…). Another thing to consider is that participants may choose to drop out of your study at any time, as well as not be able to meet some benchmark, causing you to exclude them from the final results. The last aspect of the experiment is the response, which is defined by how the participant is responding to the stimuli. This can be intentional through a keyboard click or less intentional by passively viewing their EEG results while they view the stimuli. In order to calculate things like reaction time or accuracy, you will need to have an intentional response.

This is a very general outline of what is needed for your experiment, so we will go more into detail about each topic later on in this article.

Overview

A well-designed experiment (for our consideration) will include the following characteristics:

“As simple as possible to operate for the experimenter and easy to reproduce for later researchers”$^{1}$.

Simplicity can be summarized as ease of collection. To average out the noise, many trials per participant are required. The more complex the trial, the more difficult it is to collect enough usable data. A little on the nose, I’ll keep this explanation as you should keep your design and trials: short and to the point!

Reproducibility is important in two regards: during successive repetitions conducted by your team and, as stated above, for those outside your team as well. You should be able to replicate similar results across numerous data collection periods; whether that be across different participants running the same trials, or the same participant across numerous days, there is no use in a procedure that yields inconsistent results.

Additionally, your experimental design is not the place to cut corners; though your group may understand exactly what you mean when you write something like “participants will look at the stimulus for 30 seconds,” others with no experience viewing your exact procedure will be left confused and bewildered. Research and projects are meant to be seen by others! A good, standard rule of thumb is to imagine that you have never seen your project before and, with only the experimental design as a guide, you must replicate its results fully.

In practice, components of your experimental design may look something like this

- Paradigm or particular wave of interest

- Applicable brain regions involved

- Key electrode regions outlined (and a brief explanation for their inclusion)

- Layout of blocks/trials

- Includes stimuli [specifically for exogenous BCI designs, or designs that utilizes brain activity that is generated in response to external stimuli)] + participant response [determine if passive (viewing a screen, attending to a noise, etc.)] or active (verbal response, button press, etc.)]

- Determine length (SOA, ITI, etc.) + number of trials

- Includes practice blocks (if necessary to accommodate to any experiment specific tasks + practice timing of any responses)

- Includes break/rest periods

Participants

In an attempt to ensure replicability beyond the confines of the experiment, an increased sample size is ideal to better replicate the general population as a whole. There is a trade off between open-field application of a project (increasing sample size) and ease of collection (decreasing sample size). Though of course it is easy to idealize the perfect list of candidates for an experiment, it is best to consider participant time constraints, as trials may be rerecorded numerous times given data usability.

Ethical Issues

Please ensure a basic level of respect and awareness for all participants. There should be no undue mental strain nor physical harm applied, and a fundamental principle from standard procedures will hold: “Each participant has the right to withdraw from the experiment without any reason or penalty”.

Electrode Placement

By Grace Lim for Neurotech@Davis

This figure depicts the International 10/20 System for electrode placement, which describes an Internationally recognized mapping for electrodes placed on the scalp. The letter describes the area of the brain the electrode is located, so for example, the “P” in P7 would stand for parietal lobe. The number describes the hemisphere and position of that electrode. For our purposes, the OpenBCI cyton board we use has the capacity for 8 electrodes, so if we wanted to use this map you would measure the distance from the nasion and inion using a measuring tape for standardization.

This map will come in handy as you focus on what kind of experiment you will create because you will want to focus your limited amount of electrodes on the area of interest. For example, if you were interested in studying visual attention for your EEG experiment, you would want to place electrodes on parietal and occipital regions. Areas near the frontal or temporal lobes wouldn’t be as important.

Impedance, Skin Potentials, Sweat

By Grace Lim for Neurotech@Davis

As you may know by now, if you are not careful in your collection of EEG data, most of what you are recording can be quite plainly crap. What I mean by crap is impedance to the electrode, which is “a combination of resistance, capacitance, and inductance and the properties of the skin, electrode gel, and the electrode can influence all three of these qualities” (Luck, 2014, Chapter 5). As the name suggests, there are many things that can impede the quality of the signal from the recording electrode. Most commonly this is the outermost layer of skin which includes oil and dead skin cells. “To assess the extent to which current can flow between the scalp and the recording electrode, it is important to measure the impedance rather than the resistance” (Luck, 2014, Chapter 5). Impedance is measured in ohms and as this measure increases, the ability to reject common mode noise decreases. This just means that the more impedance to the signal there is, the harder it is to reject the noise present from all electrode channels. The skills needed to physically reduce impedance are covered in the previous article on Intro to EEG.

Skin potentials are described by Luck as “a tonic voltage between the inside and the outside of the skin, and magnitude of this voltage changes as the impedance changes, thus if the electrode impedance goes up and down over time, this will lead to voltages that go up and down in EEG recording” (Luck, 2014, Chapter 5). This is why it is important to abrade the patch of skin the electrode will be placed on; to reduce the impedance from the skin potentials. You would do this by applying Nuprep or some other abrasive gel to the site of interest (usually the forehead for the frontal electrodes and the mastoids for your references) then wipe it off before putting the electrode on.

“The final major factor in determining the impedance between the outside of the skin and the electrode is sweat” (Luck, 2014, Chapter 5). The reasoning for this is actually quite intuitive if you are familiar with the properties of sweat; sweat contains salts making it conductive. Luck describes this issue as “the very gradual shifts caused by hydration of the skin are not ordinarily a problem, but the faster changes caused by the sweat glands can add random variance to the EEG and reduce your ability to find statistically significant effects” (Luck, 2014, Chapter 5). If you have the chance to view EEG data contaminated by sweat, it is actually obvious when it is occurring because it causes all affected channels to shift downwards. The way this is practically circumvented is making sure your participant is not hot while recording.

In addition, you can actually use this property to your advantage through the Galvanic skin response if you are interested in studying stress. What GSR is, is a measuring the skin’s change in electrical properties (sweat) while the participant is emotionally aroused. This is quite similar to what lie detector tests do! It is important to note however that GSR is not performed on the scalp, so it is not considered EEG and is instead used on someone’s hand/fingers.

Importance of Clean Data

By Grace Lim for Neurotech@Davis

Having clean data is one of the most important considerations for your EEG experiment, second only to participant safety (but we wont get into that now). The reasoning for the important of clean data is to save you time and money. By having clean data in the first place, you can reduce the amount of recording sessions you need to do; whether this is the amount of trials or number of participants being run. By have less sessions, you save lots of time and resources (electrode gel isn’t cheap). Besides these points, it is also just good practice as this is the standard for labs that publish papers. If you’re trying to impress your PI, you want to spend some time learning why and how to have clean data.

You may be tempted to just increase the amount of trials used in your experiment to circumvent the problem of bad data, but this is actually counterintuitive as “increasing the number of trials eventually has diminishing returns because the effect of averaging on noise is not linearly proportional to the number of trials” and “The noise decreases as a function of the square root of the number of trials in the average” (Luck, 2014, Chapter 5).

Now that you know why having clean data is important I will describe how to have clean data while you are recording, in addition to the preparation stage. A good amount of noise in the EEG recording can be attributed to skin potentials, which describes “the tonic voltage between the inside and outside of the skin. In addition, magnitude of this voltage changes as the impedance changes, thus if the electrode impedance goes up and down over time, this will lead to voltages that go up and down in EEG recording” (Luck, 2014, Chapter 5). A few steps to decrease noise due to skin potentials are to: use Nuprep, a type of abrasive gel used to prep the skin, having your participant wash their hair prior, having a cool room to reduce the amount of sweat produced, and removing any makeup on the skin where the electrode will be placed. Now to describe ways to reduce noise during recording: use medical tape to hold down electrodes (usually on the mastoids if referencing there), reducing any movements, ensure that the reference and ground electrodes are not being interfered, and giving the participant breaks to help them not get sleepy.

Sources of Noise + Ways to Minimize Them

By Sofia Fischel for Neurotech@Davis

Read more about artifacts here (includes practice and an atlas reference showing specific EEG recordings)

Much of the noise in EEG signals comes from “other biological signals such as skin potentials and from other non biological electrical noise sources in the environment” (Luck, 2014, pg. 149)

Source: Electrical devices in the recording environment.

Explanation: “The flow of current through a conductor is always accompanied by a magnetic field that flows around the conductor. Moreover, if a magnetic field passes through a conductor, it induces an electrical current. These two principles are illustrated in figure 2.1, which shows what happens when a current is passed through one of two nearby conductors. The flow of current through one of the conductors generates a magnetic field, which in turn induces current flow in the other conductor. This is how electrical noise in the environment (e.g., the 120 V in the video display) can induce electrical activity in an ERP subject, in the electrodes, or in the wires leading from the electrodes to the amplifier”. Additionally, electrical noise is present from electronic devices in the recording environment: phones, computers, etc., as well as power lines.” (Luck, 2014, pg. 38)

Figure 1, source: Luck, 2014

Solution: Though it is impossible to remove all environmental noise, placing nearby devices on airplane mode may reduce some. Further, applying a notch filter (at 60 Hz) to remove ambient electrical current that may remain from additional sources occurs during preprocessing.

Source: Biological signals from the participant

Source: Blinks

Explanation: “Within each eye, there is a large, constant electrical potential between the cornea at the front of the eye and the retina at the back of the eye (the corneal-retinal potential), which is like a dipole with positive at the front of the eye and negative at the back of the eye. This potential spreads to the surrounding parts of the head, falling off gradually toward the back of the head.” (Luck, 2014, pg. 194) “When the eyes blind, the eyelid moves across the eyes, which acts as a variable resistor that changes the EOG voltage recorded from electrodes near the eyes”.

Additional explanation: blink

Solution: One possibility is to ask participants not to blink during critical periods of the task and then provide cues for periods when they can blink freely.” However, “[a]s both blinking and spontaneous eye movement are automatic behaviors, withholding either of them requires voluntary attention that might interact with task performance as well as introduce EEG signal.” In fact, instructing participants to not blink may contribute to a lessened response: “Ochoa and Polich directly tested the possibility of dual-task interference by giving subjects an oddball task and either telling them to avoid blinking or saying nothing at all about blinking. The P3 had a smaller amplitude and a longer latency when subjects were asked to avoid blinking.” (Luck, 2014, pg. 211). In practice, spotting a blink within the raw EEG signal is not difficult; while recording, you can remind participants to limit blinks — to the best of their ability — though they may just be an artifact removed during preprocessing.

Source: Eye Movements

Explanation: “Eye movements are a result of the strong dipole inside the eye. When the eyes are stationary, this dipole creates a static voltage gradient across the scalp, which is removed by baseline correction and high-pass filters. When the eyes move, the voltages becomes more positive over the side of the head that they eyes now point toward “ (Luck, 2014, pg. 198)

Additional explanation: lateral eye movements

Solution: If eye movements are believed to contaminate your data, using a central fixation point (and instructing participants to keep their vision focused upon it) is one solution. Further, the characteristic shape of “real eye movevements” (a “boxcar-shaped voltage deflection” resulting from “subjects mak[ing] a saccade from the fixation point to some other location and then … another saccade to return to the fixation point”) helps in making it distinct from noise, improving pre-processing ease (Luck, 2014, pg. 198-9).

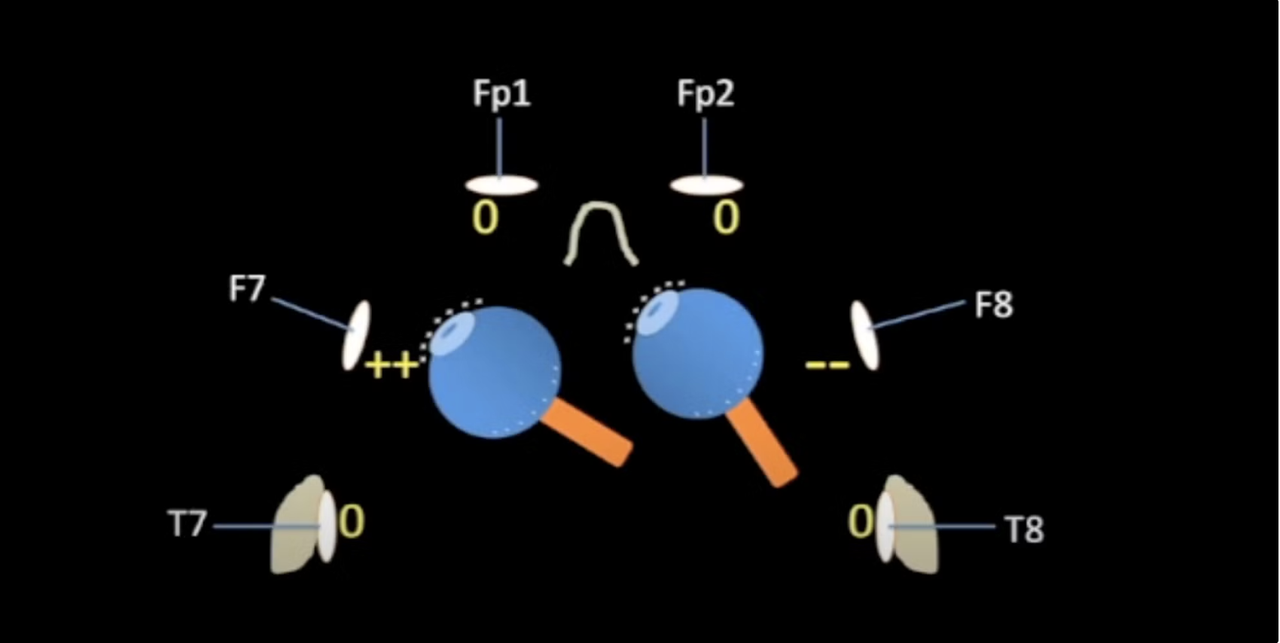

Source: EMG signals

Explanation: “The temporalis muscles are powerful muscles that we use to contract our jaws, and they are located right under the T7 and T8 electrodes [see figure 2 below]. If you see a lot of EMG in this region of the head, you can ask the subject to relax his or her jaw and avoid teeth-clenching. The temporalis muscles are so large, however, that you will see small but consistent highfrequency EMG artifact at T7 and T8 throughout the session in some subjects, even when they try to relax.” (Luck, 2014, pg. 205)

“The muscles of the neck are the remaining common source of EMG noise. If a mastoid reference is used, this activity may be picked up by the reference electrode and therefore appears in all channels that use this reference. If a different reference site is used, EMG noise arising from the neck appears at the most inferior occipital and temporal electrode sites. It can usually be minimized by asking the subject to sit straight upright rather than leaning the head forward. Neck EMG can also be minimized by having the subject sit back in a recliner with the head leaning against the recliner, but this can cause artifacts in the occipital electrodes.” (Luck, 2014, pg. 206)

Additionally, “It turns out that there is a strong electrical gradient between the base of the tongue and the tip of the tongue. Consequently, when the tongue moves up and down in the mouth, it creates large voltages that propagate to the surface of the head. These voltages are called glossokinetic artifacts” (Luck, 2014, pg. 207)

Additional explanation: chewing and hypoglossal movement, muscle

Solution: Instruct participants to remain as still as possible, reacting only in ways that align with their specific task response. Additionally, try to avoid trials that necessitate head movements, verbal responses, or any sort of movement beyond a stationary position. “[Neck muscles] can usually be minimized by asking the subject to sit straight upright rather than leaning the head forward. [They] can also be minimized by having the subject sit back in a recliner with the head leaning against the recliner, but this can cause artifacts in the occipital electrodes” (Luck, 2014, pg. 206). “When such [movement] tasks can not be avoided, one should try to plan the experiment so that the periods of movement do not overlap with critical periods of data collection.”$^{9}$ Of course, if your project necessitates movement of some kind (e.g., looking between different screens, etc.) attempt to limit it as much as is feasible (i.e. eye movement instead of head movement). Otherwise, if the project truly necessitates a large movement, plan accordingly for the applicable noise.

IMPORTANT TIP: Incidental movements are almost a given. Record timestamps of these movements, as this will better facilitate the preprocessing phase if you are able to quickly locate EMG signals.

Source: Fatigue

Explanation: Participants may grow fatigued from a long trial length or repeat exposure to repeat stimuli. This can be an issue two-fold: their response to the stimulus may decrease as their mind wanders and they grow fidgety and restless, increasing noise.

Solution: Keep recording sessions on the shorter side. Additionally, it is recommended to give participants breaks in between trials.

Source: Electrode or Cable movement